There’s a lot of conversation around AI at the moment. Depending on who you ask, it’s either the future of creativity or the end of it. But when we look specifically at audio post production for film, TV, and games, the reality is quieter, steadier, and far less dramatic than the headlines suggest.

In post, AI isn’t replacing sound designers, editors, or mixers.

It’s not generating creative direction, emotion, or performance.

What it is doing is helping with the parts of the job that are repetitive, time-consuming, or simply tedious — cleaning dialogue, removing noise, sorting files, and repairing audio issues that used to require hours of manual work.

The human element (the sense of timing, storytelling, rhythm, texture, and emotional sensitivity) remains completely untouched. That’s the part only a person can do.

And that’s not changing.

In our services business, 344 Audio, we use machine learning tools where they genuinely help, never in place of creative judgment. And through our Audio Post Essentials sound design course, we teach sound designers how to work confidently in this environment, where creativity leads and tools simply support the vision.

TL;DR — Quick Summary

AI in audio post production refers to the use of machine learning tools that assist with dialogue cleanup, noise reduction, and audio organisation. These tools help speed up technical repair work and improve clarity, but they do not replace the creative judgment, emotional sensitivity, or storytelling role of the sound designer.

In audio post-production, AI supports the craft rather than reshaping it, allowing creatives to spend less time on repetitive cleanup and more time on the parts of sound that require taste, intention, and artistic perspective.

As the technology continues to evolve, human creativity, interpretation, and emotional intelligence will only become more valuable, not less. The sound professional’s role isn’t under threat; these tools simply offer new ways to enhance the work.

What is AI in Audio Post Production?

In audio post, when we talk about “AI,” we’re really referring to machine learning models that have been trained to identify and repair common audio problems.

These tools don’t invent creative choices.

They don’t “mix for you,” and they certainly don’t understand story.

Their role is narrow and practical:

- Removing background noise

- Repairing clipped or distorted dialogue

- Reducing reverb from on-location recordings

- Tagging or organising large audio libraries

They save time, increase clarity, and help achieve cleaner results — but the shaping of emotion and meaning still belongs entirely to the sound professional.

And unlike other creative fields where AI is moving quickly, audio post is adopting these tools carefully and deliberately, because clarity, realism, and performance integrity matter too much to rush.

Is AI Changing the Audio Post Workflow?

Traditionally, dialogue cleanup and noise repair were slow processes.

You’d work through audio second-by-second, detail-by-detail.

Machine learning allows parts of that cleanup to happen in real time, alongside the creative work.

This doesn’t replace the creative individual.

It just removes friction for them.

The sound designer is still shaping:

- The emotional pacing of a scene

- The presence or distance of a voice

- The texture and atmosphere of a world

AI simply clears the dust off the surface so the shapes underneath can be seen more clearly.

How AI is Actually Being Used in Audio Post

Dialogue Editing & Cleanup

Tools like iZotope RX and Acon Digital’s restoration plugins can detect patterns in unwanted noise (clicks, hums, buzz, HVAC rumble, harshness) and reduce them while keeping the performance intact, provided they’re used with a careful ear.

This is precision repair, not creativity.

The dialogue editor still decides:

- What to keep

- What to soften

- What emotional tone the performance needs

ADR & Voice Continuity

When an actor can’t return to the studio, tools like ElevenLabs can help match tone or phrasing, provided you have the actor’s consent and the legal right to do so.

They’re continuity tools, not replacements for human performance.

Procedural Foley & SFX Support

Some systems can generate or adapt movement-based sounds (like footsteps) to save time in early scene blocking.

But the feel, texture, and intention are still handcrafted by the sound designer, and nothing can replace the real performance of a Foley artist.

Archival or Documentary-Based Restoration

Machine learning can help rescue damaged historical recordings, allowing sonic history to be heard again.

This is one of its most beautiful uses.

The Best AI Tools for Audio Post Production

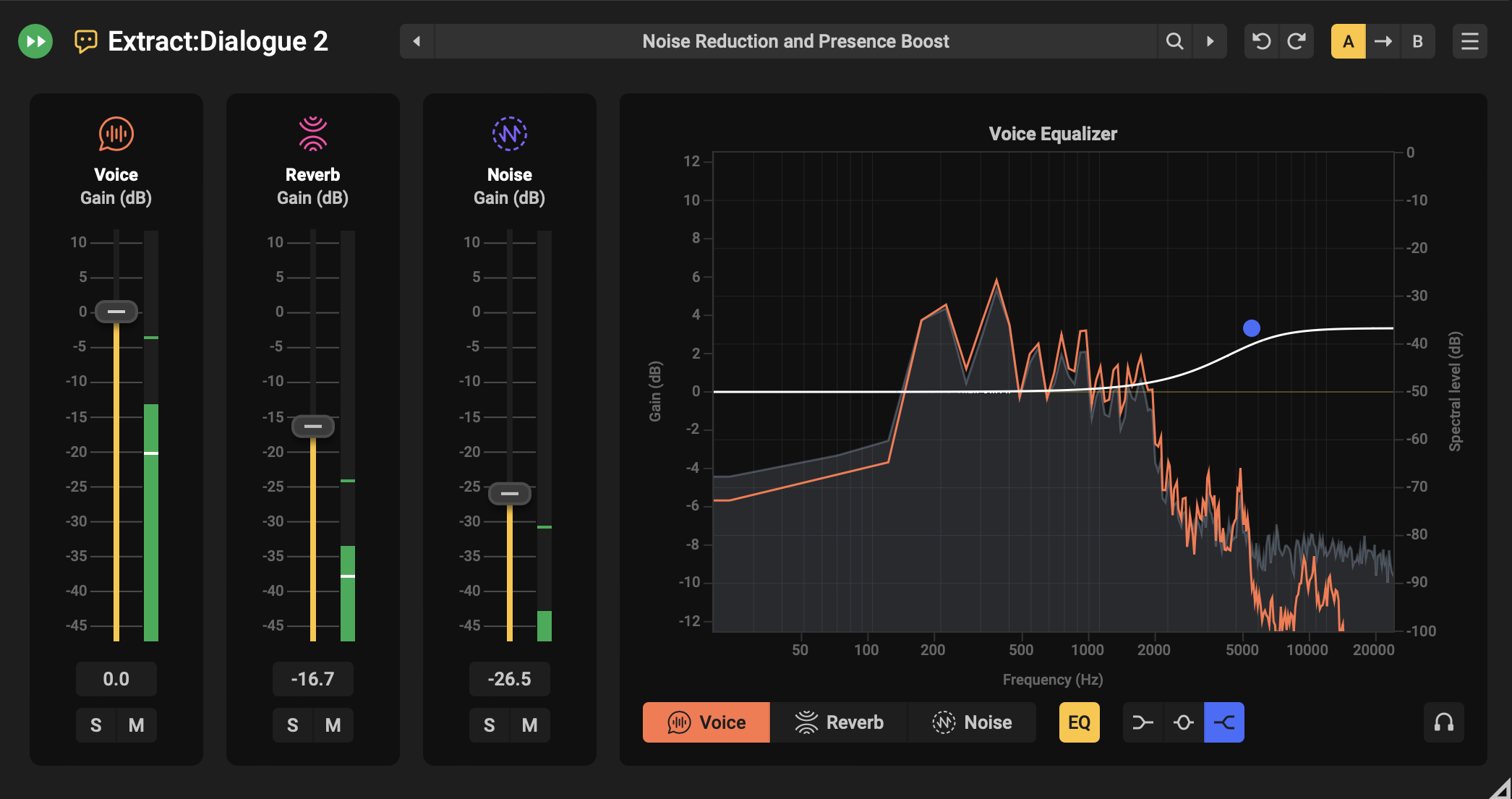

iZotope RX 11 - Industry-Leading Machine Learning Repair Suite

A cornerstone of professional post-production, iZotope RX 11 combines traditional signal processing with machine learning models for audio restoration. Its modules detect and remove hums, clicks, and other artefacts with surgical precision while preserving natural tone and clarity.

RX 11 is a clear working example of how machine learning supports, rather than replaces, the creative judgment of sound editors working in film, TV, and game audio.

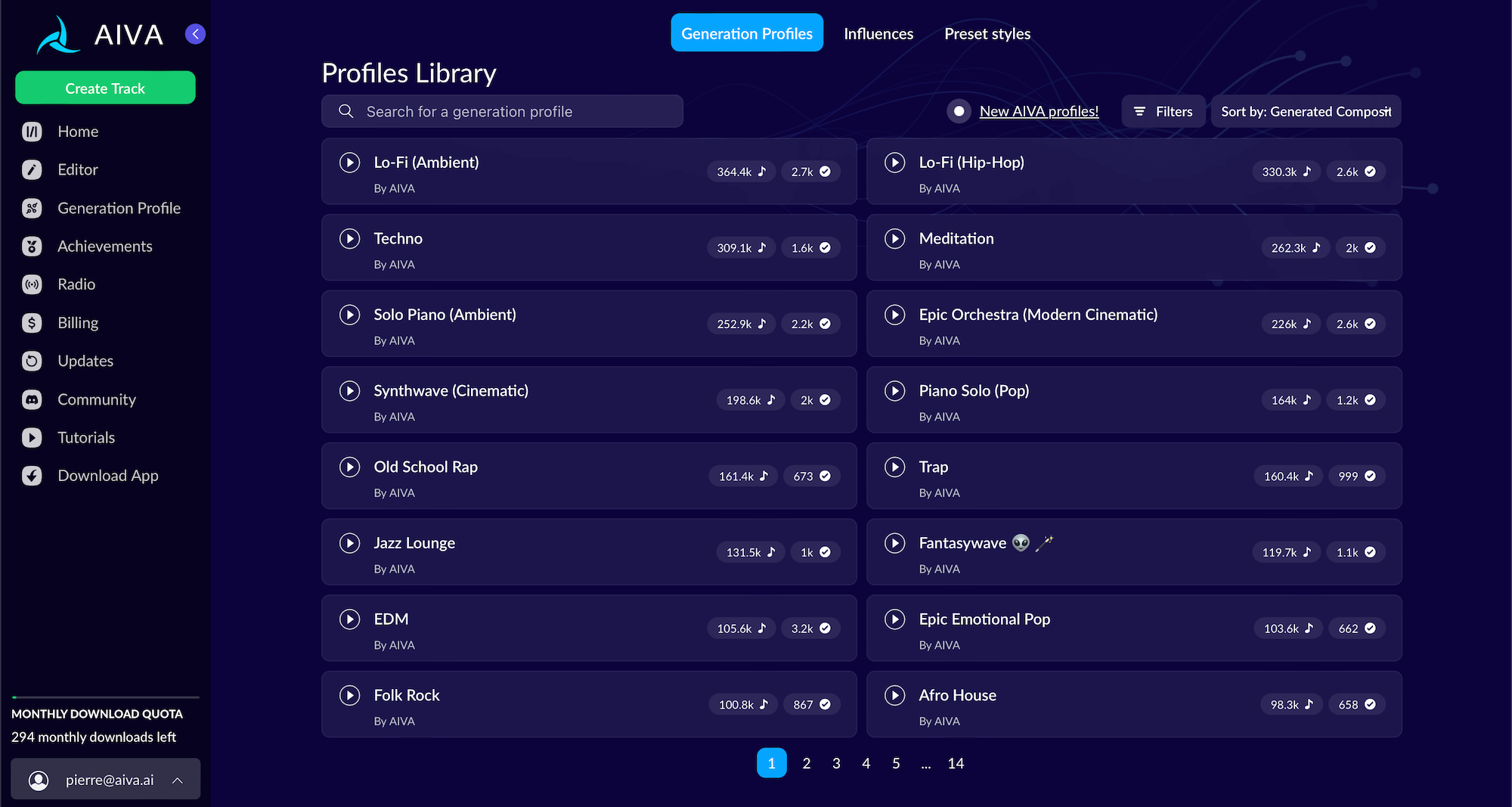

AIVA / Boomy / Soundful - Generative AI Music Creation

These composition tools can generate quick music cues based on genre or mood, with usage rights that depend on each platform’s licensing terms. They are useful for prototypes or temporary soundtracks, but do not replace composers or sound designers.

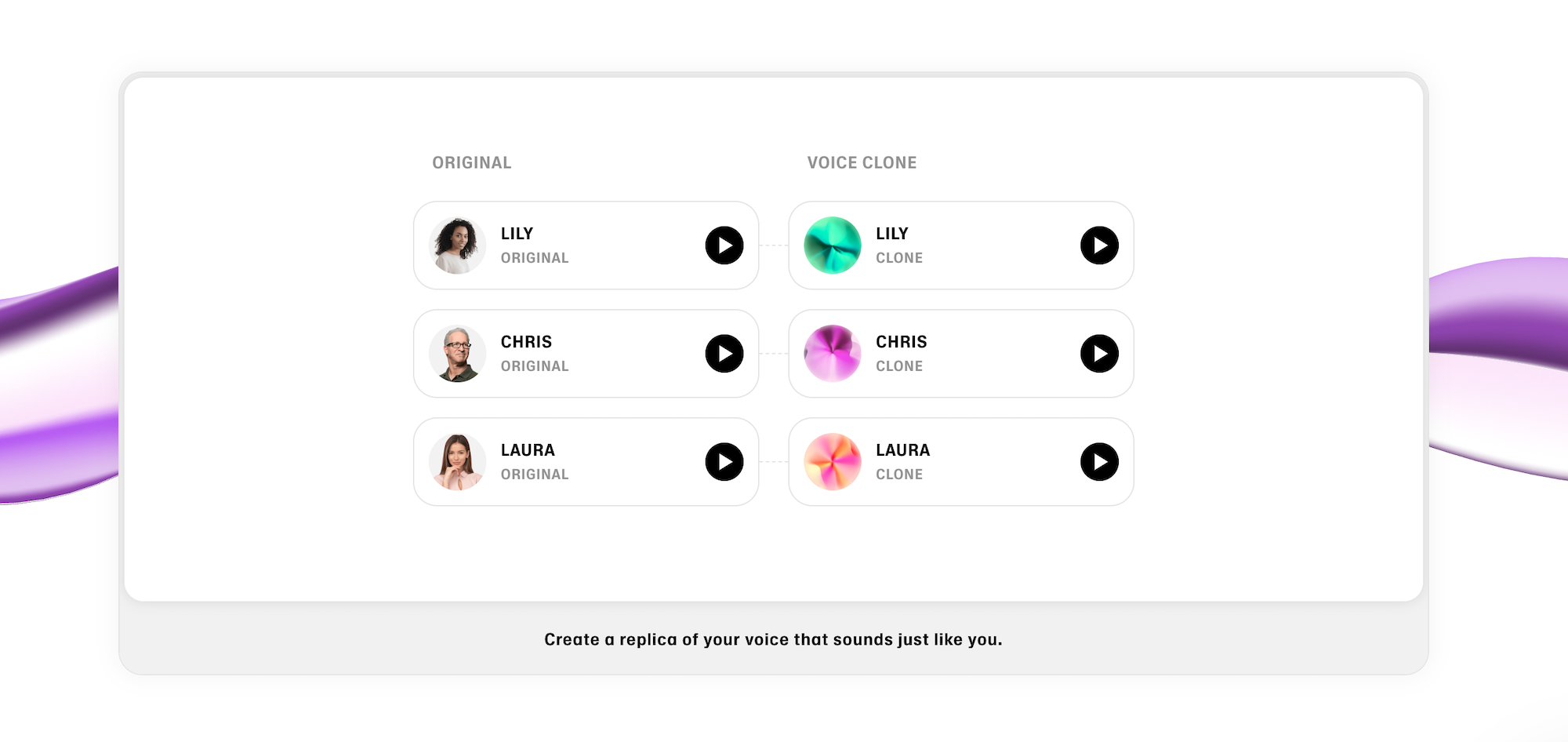

ElevenLabs - Voice Cloning and Synthetic Narration

Used responsibly, ElevenLabs can help re-create voices when ADR sessions are not feasible. Applied with care, it can maintain realism within ethical boundaries and help ensure continuity in dialogue-driven projects.

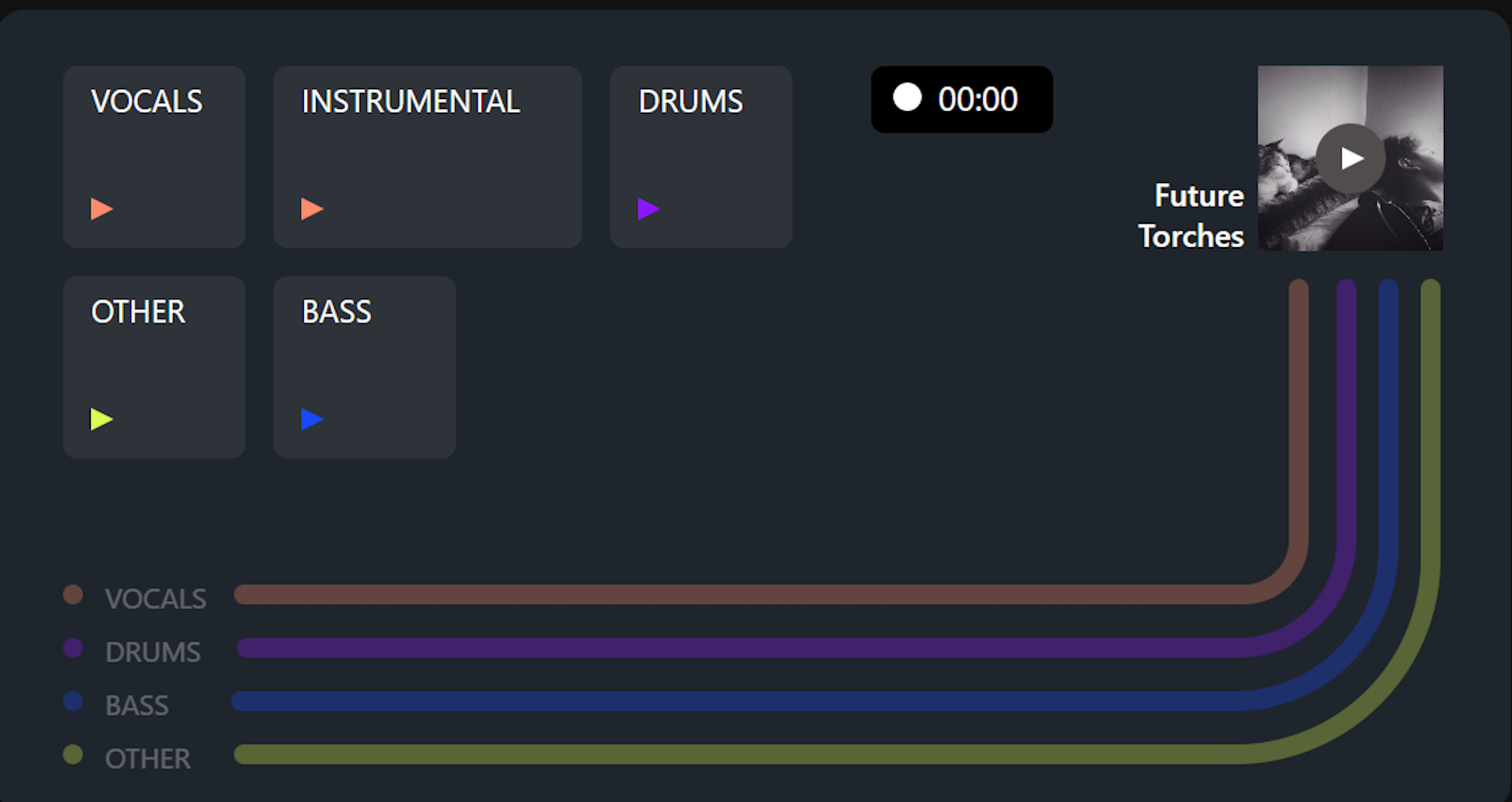

AudioShake / StemRoller - AI Source Separation

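These systems isolate individual elements from a mixed track. Depending on the tool and model, that might mean separating dialogue from music and effects, or splitting a music mix into vocals and instruments. They are valuable for restoration and adaptive game audio, where precise separation saves hours of manual editing.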

Should You Fear or Embrace AI in Audio Post?

Let’s be honest: this is the question on everyone’s mind. Not because the technology is overwhelming the craft, but because it’s new, and anything new creates uncertainty. Every sound designer, editor, mixer, and Foley artist has wondered at some point: What does this mean for my career? For my creativity? For my future?

The short answer is that you should embrace it, and here’s why…

What AI Helps With

- Faster cleanup: Machine learning tools can remove noise and repair dialogue quickly, so you spend less time fixing problems and more time shaping scenes.

- Less repetitive work: Tasks that used to be done by hand, like de-clicking, can now be batch processed in the background while you focus on the creative choices.

- More time spent on creative thinking: When the technical friction is reduced, you have more mental space to experiment, try ideas, and listen more deeply to what the story needs.

- Clearer dialogue overall: Automated repair allows the performance to come through more naturally, without distraction, so emotional detail isn’t lost under noise.

What AI Can't Do

- Feel emotion: A machine can clean or detect sound, but it can’t sense the mood, tone, or intention behind a performance.

- Understand dramatic pacing: Timing — when to hold, when to let silence breathe — is a storytelling instinct that only comes from a human ear.

- Tell a story through sound: AI can’t decide what a world should feel like; that’s a creative narrative choice made by the sound designer.

- Replace human taste: Taste is judgment, restraint, and meaning — the subtle decisions that define great sound work — and it remains entirely human.

Creativity hasn’t gone anywhere.

It’s just been given a little more room to breathe.

Learn to Work With AI, Not Against It

Understanding how to work with AI is becoming an important part of a sound designer’s toolkit, not because it replaces skill, but because it helps apply that skill more efficiently.

At 344 Academy, our Audio Post Essentials sound design course bridges traditional sound design training with practical exposure to modern workflows. We teach:

- Mixing, sound design, dialogue editing, Foley, and more

- How to work with new tools and emerging technology

- How to make creative decisions that give scenes emotional dimension

- The business side of audio post, including how to sell and market your services

The goal isn’t automation. It’s confidence.

Will AI Replace Sound Designers?

The short answer is no.

And it’s unlikely that it ever will.

Sound design is about:

- perspective

- meaning

- intuition

- creativity

- storytelling

Machines don’t possess any of those qualities.

AI replaces tasks, not talent.

We’ve seen this before:

- Digital workstations didn’t replace editors.

- Sample libraries didn’t replace Foley artists.

- Auto-conform didn’t replace dialogue editors.

The craft evolves.

The artist remains at the centre.

Our Honest Take

AI is not here to take the heart out of sound.

It’s simply making space for it.

The future of audio post production belongs to the people who:

- Understand storytelling

- Care about detail

- Listen deeply

- Trust their ears

- Use tools intentionally, not passively

Technology follows creativity, not the other way around.

If you keep developing your taste, your craft, and your understanding of sound, your value only increases, regardless of what new tools appear.

And if you want support in learning those fundamentals, that’s what we’re here for:

Check out our Audio Post Essentials Sound Design Course.